Initial commit

This commit is contained in:

commit

e58fa81ca6

8

.editorconfig

Normal file

@@ -0,0 +1,8 @@
root = true

[*]
end_of_line = lf
insert_final_newline = true
indent_size = 4
indent_style = tab
trim_trailing_whitespace = true

3

.flake8

Normal file

@@ -0,0 +1,3 @@
[flake8]
select = E3, E4, F
per-file-ignores = facefusion/core.py:E402,F401

2

.github/FUNDING.yml

vendored

Normal file

@@ -0,0 +1,2 @@
github: henryruhs
custom: https://paypal.me/henryruhs

BIN

.github/preview.png

vendored

Normal file

Binary file not shown.

After: Size 1.0 MiB

39

.github/workflows/ci.yml

vendored

Normal file

@@ -0,0 +1,39 @@
name: ci

on: [ push, pull_request ]

jobs:
  lint:
    runs-on: ubuntu-latest
    steps:
    - name: Checkout
      uses: actions/checkout@v2
    - name: Set up Python 3.10
      uses: actions/setup-python@v2
      with:
        python-version: '3.10'
    - run: pip install flake8
    - run: pip install mypy
    - run: flake8 run.py facefusion
    - run: mypy run.py facefusion
  test:
    strategy:
      matrix:
        os: [macos-latest, ubuntu-latest, windows-latest]
    runs-on: ${{ matrix.os }}
    steps:
    - name: Checkout
      uses: actions/checkout@v2
    - name: Set up ffmpeg
      uses: FedericoCarboni/setup-ffmpeg@v2
    - name: Set up Python 3.10
      uses: actions/setup-python@v2
      with:
        python-version: '3.10'
    - run: pip install -r requirements-ci.txt
    - run: curl --create-dirs --output .assets/examples/source.jpg https://huggingface.co/facefusion/examples/resolve/main/source.jpg
    - run: curl --create-dirs --output .assets/examples/target-240.mp4 https://huggingface.co/facefusion/examples/resolve/main/target-240.mp4
    - run: python run.py --source .assets/examples/source.jpg --target .assets/examples/target-240.mp4 --output .assets/examples
      if: matrix.os != 'windows-latest'
    - run: python run.py --source .assets\examples\source.jpg --target .assets\examples\target-240.mp4 --output .assets\examples
      if: matrix.os == 'windows-latest'

3

.gitignore

vendored

Normal file

@@ -0,0 +1,3 @@
.idea
.assets
.temp

78

README.md

Normal file

@ -0,0 +1,78 @@

|

|||||||

|

FaceFusion

|

||||||

|

==========

|

||||||

|

|

||||||

|

> Next generation face swapper and enhancer.

|

||||||

|

|

||||||

|

[](https://github.com/facefusion/facefusion/actions?query=workflow:ci)

|

||||||

|

|

||||||

|

|

||||||

|

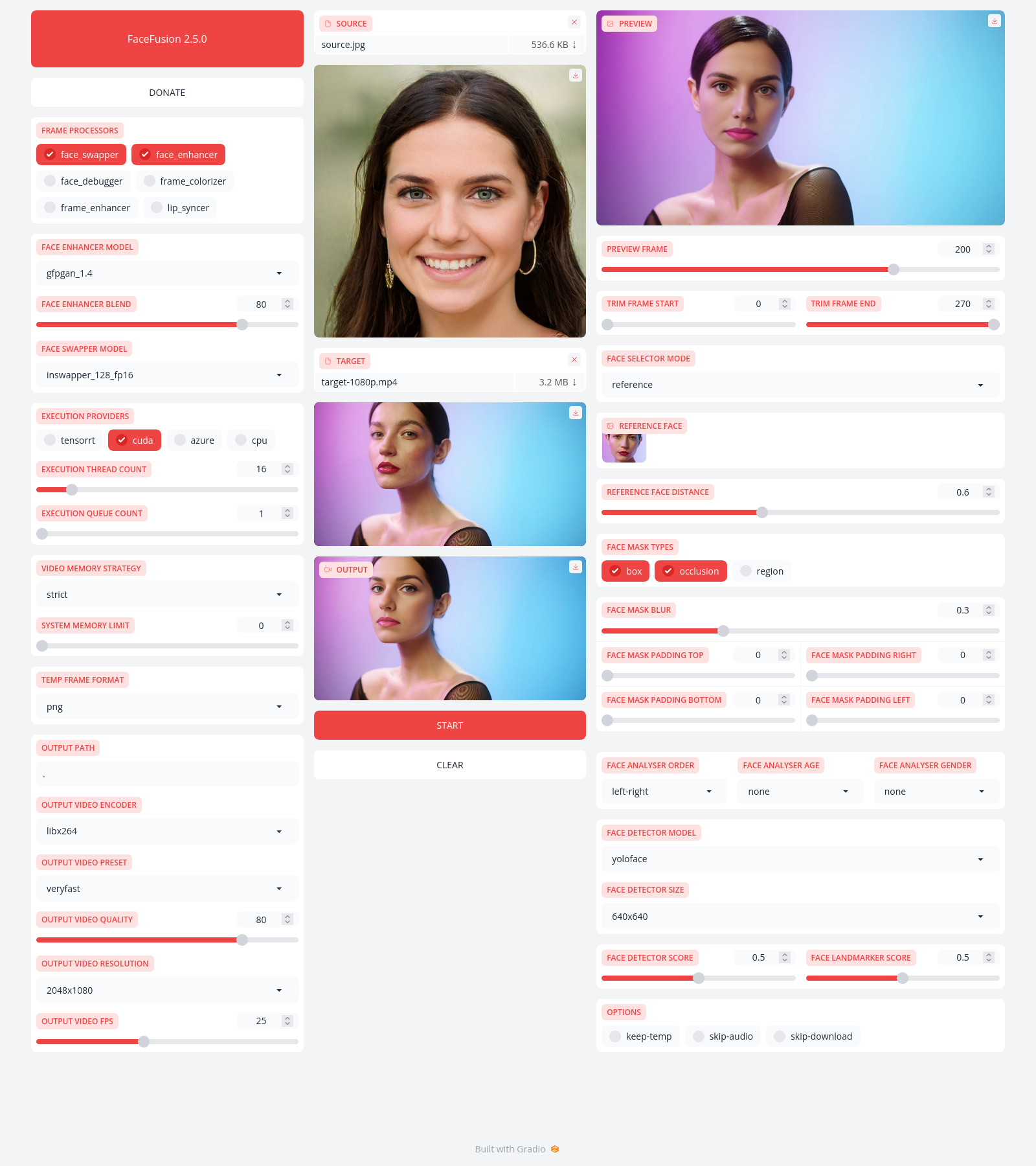

Preview

|

||||||

|

-------

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Installation

|

||||||

|

------------

|

||||||

|

|

||||||

|

Be aware, the installation needs technical skills and is not for beginners. Please do not open platform and installation related issues on GitHub. We have a very helpful [Discord](https://join.facefusion.io) community that will guide you to install FaceFusion.

|

||||||

|

|

||||||

|

[Basic](https://docs.facefusion.io/installation/basic) - It is more likely to work on your computer, but will be quite slow

|

||||||

|

|

||||||

|

[Acceleration](https://docs.facefusion.io/installation/acceleration) - Unleash the full potential of your CPU and GPU

|

||||||

|

|

||||||

|

|

||||||

|

Usage

|

||||||

|

-----

|

||||||

|

|

||||||

|

Start the program with arguments:

|

||||||

|

|

||||||

|

```

|

||||||

|

python run.py [options]

|

||||||

|

|

||||||

|

-h, --help show this help message and exit

|

||||||

|

-s SOURCE_PATH, --source SOURCE_PATH select a source image

|

||||||

|

-t TARGET_PATH, --target TARGET_PATH select a target image or video

|

||||||

|

-o OUTPUT_PATH, --output OUTPUT_PATH specify the output file or directory

|

||||||

|

--frame-processors FRAME_PROCESSORS [FRAME_PROCESSORS ...] choose from the available frame processors (choices: face_enhancer, face_swapper, frame_enhancer, ...)

|

||||||

|

--ui-layouts UI_LAYOUTS [UI_LAYOUTS ...] choose from the available ui layouts (choices: benchmark, default, ...)

|

||||||

|

--keep-fps preserve the frames per second (fps) of the target

|

||||||

|

--keep-temp retain temporary frames after processing

|

||||||

|

--skip-audio omit audio from the target

|

||||||

|

--face-recognition {reference,many} specify the method for face recognition

|

||||||

|

--face-analyser-direction {left-right,right-left,top-bottom,bottom-top,small-large,large-small} specify the direction used for face analysis

|

||||||

|

--face-analyser-age {child,teen,adult,senior} specify the age used for face analysis

|

||||||

|

--face-analyser-gender {male,female} specify the gender used for face analysis

|

||||||

|

--reference-face-position REFERENCE_FACE_POSITION specify the position of the reference face

|

||||||

|

--reference-face-distance REFERENCE_FACE_DISTANCE specify the distance between the reference face and the target face

|

||||||

|

--reference-frame-number REFERENCE_FRAME_NUMBER specify the number of the reference frame

|

||||||

|

--trim-frame-start TRIM_FRAME_START specify the start frame for extraction

|

||||||

|

--trim-frame-end TRIM_FRAME_END specify the end frame for extraction

|

||||||

|

--temp-frame-format {jpg,png} specify the image format used for frame extraction

|

||||||

|

--temp-frame-quality [0-100] specify the image quality used for frame extraction

|

||||||

|

--output-video-encoder {libx264,libx265,libvpx-vp9,h264_nvenc,hevc_nvenc} specify the encoder used for the output video

|

||||||

|

--output-video-quality [0-100] specify the quality used for the output video

|

||||||

|

--max-memory MAX_MEMORY specify the maximum amount of ram to be used (in gb)

|

||||||

|

--execution-providers {tensorrt,cuda,cpu} [{tensorrt,cuda,cpu} ...] Choose from the available execution providers (choices: cpu, ...)

|

||||||

|

--execution-thread-count EXECUTION_THREAD_COUNT Specify the number of execution threads

|

||||||

|

--execution-queue-count EXECUTION_QUEUE_COUNT Specify the number of execution queries

|

||||||

|

-v, --version show program's version number and exit

|

||||||

|

```

|

||||||

Using the `-s/--source`, `-t/--target` and `-o/--output` arguments will run the program in headless mode.


Disclaimer
----------

This software is meant to be a productive contribution to the rapidly growing AI-generated media industry. It will help artists with tasks such as animating a custom character or using the character as a model for clothing etc.

The developers of this software are aware of its possible unethical applications and are committed to taking preventative measures against them. It has a built-in check which prevents the program from working on inappropriate media including but not limited to nudity, graphic content, sensitive material such as war footage etc. We will continue to develop this project in a positive direction while adhering to law and ethics. This project may be shut down or include watermarks on the output if requested by law.

Users of this software are expected to use this software responsibly while abiding by local law. If the face of a real person is being used, users are advised to get consent from the person concerned and clearly mention that it is a deepfake when posting content online. Developers of this software will not be responsible for the actions of end-users.


Documentation
-------------

Read the [documentation](https://docs.facefusion.io) for a deep dive.
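Note on headless mode: the README says that passing source, target and output together runs the program without the UI, and `parse_args` in `facefusion/core.py` derives `facefusion.globals.headless` from exactly those three values. The following is a minimal sketch of that rule, assuming only the three path arguments matter. `is_headless` is a hypothetical helper, and the real code additionally routes the output through `normalize_output_path` (from `facefusion.utilities`, not shown in this part of the commit), which the sketch skips.

```python
import argparse
from typing import List


def is_headless(argv: List[str]) -> bool:
    # hypothetical helper: mirrors the three path arguments from parse_args
    program = argparse.ArgumentParser()
    program.add_argument('-s', '--source', dest = 'source_path')
    program.add_argument('-t', '--target', dest = 'target_path')
    program.add_argument('-o', '--output', dest = 'output_path')
    args = program.parse_args(argv)
    # headless only when all three paths were supplied
    return args.source_path is not None and args.target_path is not None and args.output_path is not None
```

With this rule, `is_headless([ '-s', 'source.jpg', '-t', 'target.mp4', '-o', 'out' ])` is true, while omitting any one of the three falls back to launching the UI.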

0

facefusion/__init__.py

Normal file

22

facefusion/capturer.py

Normal file

@@ -0,0 +1,22 @@
from typing import Optional
import cv2

from facefusion.typing import Frame


def get_video_frame(video_path : str, frame_number : int = 0) -> Optional[Frame]:
	capture = cv2.VideoCapture(video_path)
	frame_total = capture.get(cv2.CAP_PROP_FRAME_COUNT)
	capture.set(cv2.CAP_PROP_POS_FRAMES, min(frame_total, frame_number - 1))
	has_frame, frame = capture.read()
	capture.release()
	if has_frame:
		return frame
	return None


def get_video_frame_total(video_path : str) -> int:
	capture = cv2.VideoCapture(video_path)
	video_frame_total = int(capture.get(cv2.CAP_PROP_FRAME_COUNT))
	capture.release()
	return video_frame_total
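Note on `get_video_frame`: the position passed to `CAP_PROP_POS_FRAMES` is `min(frame_total, frame_number - 1)`, which evaluates to `-1` for the default `frame_number = 0` and is capped at `frame_total`, not at the last valid index. A possible clamping helper could look like the sketch below, assuming frame numbers are meant to be 1-based. `clamp_frame_index` is a hypothetical name and not part of this commit.

```python
def clamp_frame_index(frame_number: int, frame_total: int) -> int:
    # hypothetical helper: map a 1-based frame number to a valid 0-based index
    if frame_total <= 0:
        return 0
    # keep the index inside [0, frame_total - 1]
    return max(0, min(frame_total - 1, frame_number - 1))
```

The result could then be passed to `capture.set(cv2.CAP_PROP_POS_FRAMES, ...)` in place of the unclamped expression.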

10

facefusion/choices.py

Normal file

@@ -0,0 +1,10 @@
from typing import List

from facefusion.typing import FaceRecognition, FaceAnalyserDirection, FaceAnalyserAge, FaceAnalyserGender, TempFrameFormat, OutputVideoEncoder

face_recognition : List[FaceRecognition] = [ 'reference', 'many' ]
face_analyser_direction : List[FaceAnalyserDirection] = [ 'left-right', 'right-left', 'top-bottom', 'bottom-top', 'small-large', 'large-small']
face_analyser_age : List[FaceAnalyserAge] = [ 'child', 'teen', 'adult', 'senior' ]
face_analyser_gender : List[FaceAnalyserGender] = [ 'male', 'female' ]
temp_frame_format : List[TempFrameFormat] = [ 'jpg', 'png' ]
output_video_encoder : List[OutputVideoEncoder] = [ 'libx264', 'libx265', 'libvpx-vp9', 'h264_nvenc', 'hevc_nvenc' ]
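Note on `facefusion/choices.py`: these lists are the values that `parse_args` hands to argparse as `choices`. The sketch below shows one way such a list could be derived from its type so the two cannot drift apart. It assumes the types in `facefusion/typing.py` (not shown in this part of the commit) are `Literal` aliases; the definition here is illustrative only.

```python
import argparse
from typing import List, Literal, get_args

# assumption: facefusion/typing.py defines the type roughly like this
TempFrameFormat = Literal['jpg', 'png']

# derive the runtime list from the type instead of repeating the values
temp_frame_format: List[TempFrameFormat] = list(get_args(TempFrameFormat))

program = argparse.ArgumentParser()
program.add_argument('--temp-frame-format', dest = 'temp_frame_format', default = 'jpg', choices = temp_frame_format)
args = program.parse_args([ '--temp-frame-format', 'png' ])
```

Argparse rejects any value outside `choices`, so an unsupported format fails at parse time and never reaches frame extraction.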

234

facefusion/core.py

Executable file

@@ -0,0 +1,234 @@
#!/usr/bin/env python3

import os
# single thread doubles cuda performance
os.environ['OMP_NUM_THREADS'] = '1'
# reduce tensorflow log level
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
import sys
import warnings
from typing import List
import platform
import signal
import shutil
import argparse
import onnxruntime
import tensorflow

import facefusion.choices
import facefusion.globals
from facefusion import wording, metadata
from facefusion.predictor import predict_image, predict_video
from facefusion.processors.frame.core import get_frame_processors_modules
from facefusion.utilities import is_image, is_video, detect_fps, create_video, extract_frames, get_temp_frame_paths, restore_audio, create_temp, move_temp, clean_temp, normalize_output_path, list_module_names, decode_execution_providers, encode_execution_providers

warnings.filterwarnings('ignore', category = FutureWarning, module = 'insightface')
warnings.filterwarnings('ignore', category = UserWarning, module = 'torchvision')


def parse_args() -> None:
	signal.signal(signal.SIGINT, lambda signal_number, frame: destroy())
	program = argparse.ArgumentParser(formatter_class = lambda prog: argparse.HelpFormatter(prog, max_help_position = 120))
	program.add_argument('-s', '--source', help = wording.get('source_help'), dest = 'source_path')
	program.add_argument('-t', '--target', help = wording.get('target_help'), dest = 'target_path')
	program.add_argument('-o', '--output', help = wording.get('output_help'), dest = 'output_path')
	program.add_argument('--frame-processors', help = wording.get('frame_processors_help').format(choices = ', '.join(list_module_names('facefusion/processors/frame/modules'))), dest = 'frame_processors', default = ['face_swapper'], nargs='+')
	program.add_argument('--ui-layouts', help = wording.get('ui_layouts_help').format(choices = ', '.join(list_module_names('facefusion/uis/layouts'))), dest = 'ui_layouts', default = ['default'], nargs='+')
	program.add_argument('--keep-fps', help = wording.get('keep_fps_help'), dest = 'keep_fps', action='store_true')
	program.add_argument('--keep-temp', help = wording.get('keep_temp_help'), dest = 'keep_temp', action='store_true')
	program.add_argument('--skip-audio', help = wording.get('skip_audio_help'), dest = 'skip_audio', action='store_true')
	program.add_argument('--face-recognition', help = wording.get('face_recognition_help'), dest = 'face_recognition', default = 'reference', choices = facefusion.choices.face_recognition)
	program.add_argument('--face-analyser-direction', help = wording.get('face_analyser_direction_help'), dest = 'face_analyser_direction', default = 'left-right', choices = facefusion.choices.face_analyser_direction)
	program.add_argument('--face-analyser-age', help = wording.get('face_analyser_age_help'), dest = 'face_analyser_age', choices = facefusion.choices.face_analyser_age)
	program.add_argument('--face-analyser-gender', help = wording.get('face_analyser_gender_help'), dest = 'face_analyser_gender', choices = facefusion.choices.face_analyser_gender)
	program.add_argument('--reference-face-position', help = wording.get('reference_face_position_help'), dest = 'reference_face_position', type = int, default = 0)
	program.add_argument('--reference-face-distance', help = wording.get('reference_face_distance_help'), dest = 'reference_face_distance', type = float, default = 1.5)
	program.add_argument('--reference-frame-number', help = wording.get('reference_frame_number_help'), dest = 'reference_frame_number', type = int, default = 0)
	program.add_argument('--trim-frame-start', help = wording.get('trim_frame_start_help'), dest = 'trim_frame_start', type = int)
	program.add_argument('--trim-frame-end', help = wording.get('trim_frame_end_help'), dest = 'trim_frame_end', type = int)
	program.add_argument('--temp-frame-format', help = wording.get('temp_frame_format_help'), dest = 'temp_frame_format', default = 'jpg', choices = facefusion.choices.temp_frame_format)
	program.add_argument('--temp-frame-quality', help = wording.get('temp_frame_quality_help'), dest = 'temp_frame_quality', type = int, default = 100, choices = range(101), metavar = '[0-100]')
	program.add_argument('--output-video-encoder', help = wording.get('output_video_encoder_help'), dest = 'output_video_encoder', default = 'libx264', choices = facefusion.choices.output_video_encoder)
	program.add_argument('--output-video-quality', help = wording.get('output_video_quality_help'), dest = 'output_video_quality', type = int, default = 90, choices = range(101), metavar = '[0-100]')
	program.add_argument('--max-memory', help = wording.get('max_memory_help'), dest = 'max_memory', type = int)
	program.add_argument('--execution-providers', help = wording.get('execution_providers_help').format(choices = 'cpu'), dest = 'execution_providers', default = ['cpu'], choices = suggest_execution_providers_choices(), nargs='+')
	program.add_argument('--execution-thread-count', help = wording.get('execution_thread_count_help'), dest = 'execution_thread_count', type = int, default = suggest_execution_thread_count_default())
	program.add_argument('--execution-queue-count', help = wording.get('execution_queue_count_help'), dest = 'execution_queue_count', type = int, default = 1)
	program.add_argument('-v', '--version', action='version', version = metadata.get('name') + ' ' + metadata.get('version'))

	args = program.parse_args()

	facefusion.globals.source_path = args.source_path
	facefusion.globals.target_path = args.target_path
	facefusion.globals.output_path = normalize_output_path(facefusion.globals.source_path, facefusion.globals.target_path, args.output_path)
	facefusion.globals.headless = facefusion.globals.source_path is not None and facefusion.globals.target_path is not None and facefusion.globals.output_path is not None
	facefusion.globals.frame_processors = args.frame_processors
	facefusion.globals.ui_layouts = args.ui_layouts
	facefusion.globals.keep_fps = args.keep_fps
	facefusion.globals.keep_temp = args.keep_temp
	facefusion.globals.skip_audio = args.skip_audio
	facefusion.globals.face_recognition = args.face_recognition
	facefusion.globals.face_analyser_direction = args.face_analyser_direction
	facefusion.globals.face_analyser_age = args.face_analyser_age
	facefusion.globals.face_analyser_gender = args.face_analyser_gender
	facefusion.globals.reference_face_position = args.reference_face_position
	facefusion.globals.reference_frame_number = args.reference_frame_number
	facefusion.globals.reference_face_distance = args.reference_face_distance
	facefusion.globals.trim_frame_start = args.trim_frame_start
	facefusion.globals.trim_frame_end = args.trim_frame_end
	facefusion.globals.temp_frame_format = args.temp_frame_format
	facefusion.globals.temp_frame_quality = args.temp_frame_quality
	facefusion.globals.output_video_encoder = args.output_video_encoder
	facefusion.globals.output_video_quality = args.output_video_quality
	facefusion.globals.max_memory = args.max_memory
	facefusion.globals.execution_providers = decode_execution_providers(args.execution_providers)
	facefusion.globals.execution_thread_count = args.execution_thread_count
	facefusion.globals.execution_queue_count = args.execution_queue_count


def suggest_execution_providers_choices() -> List[str]:
	return encode_execution_providers(onnxruntime.get_available_providers())


def suggest_execution_thread_count_default() -> int:
	if 'CUDAExecutionProvider' in onnxruntime.get_available_providers():
		return 8
	return 1


def limit_resources() -> None:
	# prevent tensorflow memory leak
	gpus = tensorflow.config.experimental.list_physical_devices('GPU')
	for gpu in gpus:
		tensorflow.config.experimental.set_virtual_device_configuration(gpu, [
			tensorflow.config.experimental.VirtualDeviceConfiguration(memory_limit = 1024)
		])
	# limit memory usage
	if facefusion.globals.max_memory:
		memory = facefusion.globals.max_memory * 1024 ** 3
		if platform.system().lower() == 'darwin':
			memory = facefusion.globals.max_memory * 1024 ** 6
		if platform.system().lower() == 'windows':
			import ctypes
			kernel32 = ctypes.windll.kernel32 # type: ignore[attr-defined]
			kernel32.SetProcessWorkingSetSize(-1, ctypes.c_size_t(memory), ctypes.c_size_t(memory))
		else:
			import resource
			resource.setrlimit(resource.RLIMIT_DATA, (memory, memory))
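Note on `limit_resources`: `--max-memory` is given in GB and converted to bytes with `1024 ** 3`, while the darwin branch multiplies by `1024 ** 6` instead. The commit does not say why. The arithmetic below is only a sketch for comparing the two magnitudes; `gigabytes_to_bytes` is a hypothetical helper and not part of this commit.

```python
def gigabytes_to_bytes(max_memory: int) -> int:
    # 1 GiB = 1024 ** 3 bytes
    return max_memory * 1024 ** 3


# value used on Linux and Windows for --max-memory 4
four_gb = gigabytes_to_bytes(4)
# the expression from the darwin branch, evaluated for the same input
darwin_value = 4 * 1024 ** 6
```

For an input of 4, the first value is `2 ** 32` bytes and the darwin expression is `2 ** 62` bytes, which is far beyond any physical memory size.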


def update_status(message : str, scope : str = 'FACEFUSION.CORE') -> None:
	print('[' + scope + '] ' + message)


def pre_check() -> bool:
	if sys.version_info < (3, 10):
		update_status(wording.get('python_not_supported').format(version = '3.10'))
		return False
	if not shutil.which('ffmpeg'):
		update_status(wording.get('ffmpeg_not_installed'))
		return False
	return True


def process_image() -> None:
	if predict_image(facefusion.globals.target_path):
		return
	shutil.copy2(facefusion.globals.target_path, facefusion.globals.output_path)
	# process frame
	for frame_processor_module in get_frame_processors_modules(facefusion.globals.frame_processors):
		update_status(wording.get('processing'), frame_processor_module.NAME)
		frame_processor_module.process_image(facefusion.globals.source_path, facefusion.globals.output_path, facefusion.globals.output_path)
		frame_processor_module.post_process()
	# validate image
	if is_image(facefusion.globals.target_path):
		update_status(wording.get('processing_image_succeed'))
	else:
		update_status(wording.get('processing_image_failed'))


def process_video() -> None:
	if predict_video(facefusion.globals.target_path):
		return
	update_status(wording.get('creating_temp'))
	create_temp(facefusion.globals.target_path)
	# extract frames
	if facefusion.globals.keep_fps:
		fps = detect_fps(facefusion.globals.target_path)
		update_status(wording.get('extracting_frames_fps').format(fps = fps))
		extract_frames(facefusion.globals.target_path, fps)
	else:
		update_status(wording.get('extracting_frames_fps').format(fps = 30))
		extract_frames(facefusion.globals.target_path)
	# process frame
	temp_frame_paths = get_temp_frame_paths(facefusion.globals.target_path)
	if temp_frame_paths:
		for frame_processor_module in get_frame_processors_modules(facefusion.globals.frame_processors):
			update_status(wording.get('processing'), frame_processor_module.NAME)
			frame_processor_module.process_video(facefusion.globals.source_path, temp_frame_paths)
			frame_processor_module.post_process()
	else:
		update_status(wording.get('temp_frames_not_found'))
		return
	# create video
	if facefusion.globals.keep_fps:
		fps = detect_fps(facefusion.globals.target_path)
		update_status(wording.get('creating_video_fps').format(fps = fps))
		if not create_video(facefusion.globals.target_path, fps):
			update_status(wording.get('creating_video_failed'))
	else:
		update_status(wording.get('creating_video_fps').format(fps = 30))
		if not create_video(facefusion.globals.target_path):
			update_status(wording.get('creating_video_failed'))
	# handle audio
	if facefusion.globals.skip_audio:
		move_temp(facefusion.globals.target_path, facefusion.globals.output_path)
		update_status(wording.get('skipping_audio'))
	else:
		if facefusion.globals.keep_fps:
			update_status(wording.get('restoring_audio'))
		else:
			update_status(wording.get('restoring_audio_issues'))
		restore_audio(facefusion.globals.target_path, facefusion.globals.output_path)
	# clean temp
	update_status(wording.get('cleaning_temp'))
	clean_temp(facefusion.globals.target_path)
	# validate video
	if is_video(facefusion.globals.target_path):
		update_status(wording.get('processing_video_succeed'))
	else:
		update_status(wording.get('processing_video_failed'))


def conditional_process() -> None:
	for frame_processor_module in get_frame_processors_modules(facefusion.globals.frame_processors):
		if not frame_processor_module.pre_process():
			return
	if is_image(facefusion.globals.target_path):
		process_image()
	if is_video(facefusion.globals.target_path):
		process_video()


def run() -> None:
	parse_args()
	limit_resources()
	# pre check
	if not pre_check():
		return
	for frame_processor in get_frame_processors_modules(facefusion.globals.frame_processors):
		if not frame_processor.pre_check():
			return
	# process or launch
	if facefusion.globals.headless:
		conditional_process()
	else:
		import facefusion.uis.core as ui

		ui.launch()


def destroy() -> None:
	if facefusion.globals.target_path:
		clean_temp(facefusion.globals.target_path)
	sys.exit()

106

facefusion/face_analyser.py

Normal file

@@ -0,0 +1,106 @@
import threading
from typing import Any, Optional, List
import insightface
import numpy

import facefusion.globals
from facefusion.typing import Frame, Face, FaceAnalyserDirection, FaceAnalyserAge, FaceAnalyserGender

FACE_ANALYSER = None
THREAD_LOCK = threading.Lock()


def get_face_analyser() -> Any:
	global FACE_ANALYSER

	with THREAD_LOCK:
		if FACE_ANALYSER is None:
			FACE_ANALYSER = insightface.app.FaceAnalysis(name = 'buffalo_l', providers = facefusion.globals.execution_providers)
			FACE_ANALYSER.prepare(ctx_id = 0)
	return FACE_ANALYSER


def clear_face_analyser() -> Any:
	global FACE_ANALYSER

	FACE_ANALYSER = None


def get_one_face(frame : Frame, position : int = 0) -> Optional[Face]:
	many_faces = get_many_faces(frame)
	if many_faces:
		try:
			return many_faces[position]
		except IndexError:
			return many_faces[-1]
	return None


def get_many_faces(frame : Frame) -> List[Face]:
	try:
		faces = get_face_analyser().get(frame)
		if facefusion.globals.face_analyser_direction:
			faces = sort_by_direction(faces, facefusion.globals.face_analyser_direction)
		if facefusion.globals.face_analyser_age:
			faces = filter_by_age(faces, facefusion.globals.face_analyser_age)
		if facefusion.globals.face_analyser_gender:
			faces = filter_by_gender(faces, facefusion.globals.face_analyser_gender)
		return faces
	except (AttributeError, ValueError):
		return []


def find_similar_faces(frame : Frame, reference_face : Face, face_distance : float) -> List[Face]:
	many_faces = get_many_faces(frame)
	similar_faces = []
	if many_faces:
		for face in many_faces:
			if hasattr(face, 'normed_embedding') and hasattr(reference_face, 'normed_embedding'):
				current_face_distance = numpy.sum(numpy.square(face.normed_embedding - reference_face.normed_embedding))
				if current_face_distance < face_distance:
					similar_faces.append(face)
	return similar_faces
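Note on `find_similar_faces`: the comparison uses the squared Euclidean distance between the two `normed_embedding` vectors. Assuming these vectors are unit-length (the name suggests it, but this commit does not show it), that distance equals `2 - 2 * cos(angle)` and lies between 0 and 4, so the default `--reference-face-distance` of 1.5 would correspond to a cosine similarity above 0.25. The pure-Python sketch below checks that identity on toy vectors; all helper names are hypothetical.

```python
import math
from typing import List


def normalize(vector: List[float]) -> List[float]:
    # scale the vector to unit length
    norm = math.sqrt(sum(value * value for value in vector))
    return [ value / norm for value in vector ]


def squared_distance(a: List[float], b: List[float]) -> float:
    # same quantity as numpy.sum(numpy.square(a - b))
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cosine_similarity(a: List[float], b: List[float]) -> float:
    # a plain dot product is the cosine only because both inputs are unit-length
    return sum(x * y for x, y in zip(a, b))


reference = normalize([ 1.0, 0.0 ])
candidate = normalize([ 1.0, 1.0 ])
distance = squared_distance(reference, candidate)
```

Here the two toy vectors are 45 degrees apart, so `distance` falls below the 1.5 default and the candidate would count as similar under the stated assumption.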


def sort_by_direction(faces : List[Face], direction : FaceAnalyserDirection) -> List[Face]:
	if direction == 'left-right':
		return sorted(faces, key = lambda face: face['bbox'][0])
	if direction == 'right-left':
		return sorted(faces, key = lambda face: face['bbox'][0], reverse = True)
	if direction == 'top-bottom':
		return sorted(faces, key = lambda face: face['bbox'][1])
	if direction == 'bottom-top':
		return sorted(faces, key = lambda face: face['bbox'][1], reverse = True)
	if direction == 'small-large':
		return sorted(faces, key = lambda face: (face['bbox'][2] - face['bbox'][0]) * (face['bbox'][3] - face['bbox'][1]))
	if direction == 'large-small':
		return sorted(faces, key = lambda face: (face['bbox'][2] - face['bbox'][0]) * (face['bbox'][3] - face['bbox'][1]), reverse = True)
	return faces


def filter_by_age(faces : List[Face], age : FaceAnalyserAge) -> List[Face]:
	filter_faces = []
	for face in faces:
		if face['age'] < 13 and age == 'child':
			filter_faces.append(face)
		elif face['age'] < 19 and age == 'teen':
			filter_faces.append(face)
		elif face['age'] < 60 and age == 'adult':
			filter_faces.append(face)
		elif face['age'] > 59 and age == 'senior':
			filter_faces.append(face)
	return filter_faces


def filter_by_gender(faces : List[Face], gender : FaceAnalyserGender) -> List[Face]:
	filter_faces = []
	for face in faces:
		if face['gender'] == 1 and gender == 'male':
			filter_faces.append(face)
		if face['gender'] == 0 and gender == 'female':
			filter_faces.append(face)
	return filter_faces


def get_faces_total(frame : Frame) -> int:
	return len(get_many_faces(frame))
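Note on `filter_by_age`: each branch only checks an upper bound, so with `age == 'teen'` any face under 19 passes (including those under 13), and with `age == 'adult'` any face under 60 passes. If non-overlapping groups are intended, which is an assumption on my part, explicit ranges are one way to express that. The sketch below reuses the thresholds from the commit (13, 19, 60) and plain dicts in place of insightface faces; `AGE_RANGES` and `filter_by_age_range` are hypothetical names.

```python
import math
from typing import Any, Dict, List, Tuple

# assumption: half-open [lower, upper) ranges built from the commit's thresholds
AGE_RANGES: Dict[str, Tuple[float, float]] = {
    'child': (0, 13),
    'teen': (13, 19),
    'adult': (19, 60),
    'senior': (60, math.inf)
}


def filter_by_age_range(faces: List[Dict[str, Any]], age: str) -> List[Dict[str, Any]]:
    lower, upper = AGE_RANGES[age]
    # keep only faces whose estimated age falls inside the selected range
    return [ face for face in faces if lower <= face['age'] < upper ]


faces = [ { 'age': 10 }, { 'age': 16 }, { 'age': 30 }, { 'age': 70 } ]
teens = filter_by_age_range(faces, 'teen')
```

With the sample list above, the `'teen'` filter keeps only the face aged 16, whereas the committed chain would also keep the face aged 10.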

21

facefusion/face_reference.py

Normal file

@@ -0,0 +1,21 @@
from typing import Optional

from facefusion.typing import Face

FACE_REFERENCE = None


def get_face_reference() -> Optional[Face]:
	return FACE_REFERENCE


def set_face_reference(face : Face) -> None:
	global FACE_REFERENCE

	FACE_REFERENCE = face


def clear_face_reference() -> None:
	global FACE_REFERENCE

	FACE_REFERENCE = None

30

facefusion/globals.py

Normal file

@ -0,0 +1,30 @@

from typing import List, Optional

from facefusion.typing import FaceRecognition, FaceAnalyserDirection, FaceAnalyserAge, FaceAnalyserGender, TempFrameFormat

source_path : Optional[str] = None
target_path : Optional[str] = None
output_path : Optional[str] = None
headless : Optional[bool] = None
frame_processors : List[str] = []
ui_layouts : List[str] = []
keep_fps : Optional[bool] = None
keep_temp : Optional[bool] = None
skip_audio : Optional[bool] = None
face_recognition : Optional[FaceRecognition] = None
face_analyser_direction : Optional[FaceAnalyserDirection] = None
face_analyser_age : Optional[FaceAnalyserAge] = None
face_analyser_gender : Optional[FaceAnalyserGender] = None
reference_face_position : Optional[int] = None
reference_frame_number : Optional[int] = None
reference_face_distance : Optional[float] = None
trim_frame_start : Optional[int] = None
trim_frame_end : Optional[int] = None
temp_frame_format : Optional[TempFrameFormat] = None
temp_frame_quality : Optional[int] = None
output_video_encoder : Optional[str] = None
output_video_quality : Optional[int] = None
max_memory : Optional[int] = None
execution_providers : List[str] = []
execution_thread_count : Optional[int] = None
execution_queue_count : Optional[int] = None

18

facefusion/metadata.py

Normal file

@ -0,0 +1,18 @@

name = 'FaceFusion'
version = '1.0.0-beta'
website = 'https://facefusion.io'


METADATA =\
{
	'name': 'FaceFusion',
	'description': 'Next generation face swapper and enhancer',
	'version': '1.0.0-beta',
	'license': 'MIT',
	'author': 'Henry Ruhs',
	'url': 'https://facefusion.io'
}


def get(key : str) -> str:
	return METADATA[key]

43

facefusion/predictor.py

Normal file

@ -0,0 +1,43 @@

import threading
import numpy
import opennsfw2
from PIL import Image
from keras import Model

from facefusion.typing import Frame

PREDICTOR = None
THREAD_LOCK = threading.Lock()
MAX_PROBABILITY = 0.75


def get_predictor() -> Model:
	global PREDICTOR

	with THREAD_LOCK:
		if PREDICTOR is None:
			PREDICTOR = opennsfw2.make_open_nsfw_model()
	return PREDICTOR


def clear_predictor() -> None:
	global PREDICTOR

	PREDICTOR = None


def predict_frame(target_frame : Frame) -> bool:
	image = Image.fromarray(target_frame)
	image = opennsfw2.preprocess_image(image, opennsfw2.Preprocessing.YAHOO)
	views = numpy.expand_dims(image, axis = 0)
	_, probability = get_predictor().predict(views)[0]
	return probability > MAX_PROBABILITY


def predict_image(target_path : str) -> bool:
	return opennsfw2.predict_image(target_path) > MAX_PROBABILITY


def predict_video(target_path : str) -> bool:
	_, probabilities = opennsfw2.predict_video_frames(video_path = target_path, frame_interval = 100)
	return any(probability > MAX_PROBABILITY for probability in probabilities)

0

facefusion/processors/__init__.py

Normal file

0

facefusion/processors/frame/__init__.py

Normal file

113

facefusion/processors/frame/core.py

Normal file

@ -0,0 +1,113 @@

import os
import sys
import importlib
import psutil
from concurrent.futures import ThreadPoolExecutor, as_completed
from queue import Queue
from types import ModuleType
from typing import Any, List, Callable
from tqdm import tqdm

import facefusion.globals
from facefusion import wording

FRAME_PROCESSORS_MODULES : List[ModuleType] = []
FRAME_PROCESSORS_METHODS =\
[
	'get_frame_processor',
	'clear_frame_processor',
	'pre_check',
	'pre_process',
	'process_frame',
	'process_frames',
	'process_image',
	'process_video',
	'post_process'
]


def load_frame_processor_module(frame_processor : str) -> Any:
	try:
		frame_processor_module = importlib.import_module('facefusion.processors.frame.modules.' + frame_processor)
		for method_name in FRAME_PROCESSORS_METHODS:
			if not hasattr(frame_processor_module, method_name):
				raise NotImplementedError
	except ModuleNotFoundError:
		sys.exit(wording.get('frame_processor_not_loaded').format(frame_processor = frame_processor))
	except NotImplementedError:
		sys.exit(wording.get('frame_processor_not_implemented').format(frame_processor = frame_processor))
	return frame_processor_module


def get_frame_processors_modules(frame_processors : List[str]) -> List[ModuleType]:
	global FRAME_PROCESSORS_MODULES

	if not FRAME_PROCESSORS_MODULES:
		for frame_processor in frame_processors:
			frame_processor_module = load_frame_processor_module(frame_processor)
			FRAME_PROCESSORS_MODULES.append(frame_processor_module)
	return FRAME_PROCESSORS_MODULES


def clear_frame_processors_modules() -> None:
	global FRAME_PROCESSORS_MODULES

	for frame_processor_module in get_frame_processors_modules(facefusion.globals.frame_processors):
		frame_processor_module.clear_frame_processor()
	FRAME_PROCESSORS_MODULES = []


def multi_process_frame(source_path : str, temp_frame_paths : List[str], process_frames: Callable[[str, List[str], Any], None], update: Callable[[], None]) -> None:
	with ThreadPoolExecutor(max_workers = facefusion.globals.execution_thread_count) as executor:
		futures = []
		queue = create_queue(temp_frame_paths)
		queue_per_future = max(len(temp_frame_paths) // facefusion.globals.execution_thread_count * facefusion.globals.execution_queue_count, 1)
		while not queue.empty():
			future = executor.submit(process_frames, source_path, pick_queue(queue, queue_per_future), update)
			futures.append(future)
		for future in as_completed(futures):
			future.result()


def create_queue(temp_frame_paths : List[str]) -> Queue[str]:
	queue: Queue[str] = Queue()
	for frame_path in temp_frame_paths:
		queue.put(frame_path)
	return queue


def pick_queue(queue : Queue[str], queue_per_future : int) -> List[str]:
	queues = []
	for _ in range(queue_per_future):
		if not queue.empty():
			queues.append(queue.get())
	return queues


def process_video(source_path : str, frame_paths : List[str], process_frames : Callable[[str, List[str], Any], None]) -> None:
	progress_bar_format = '{l_bar}{bar}| {n_fmt}/{total_fmt} [{elapsed}<{remaining}, {rate_fmt}{postfix}]'
	total = len(frame_paths)
	with tqdm(total = total, desc = wording.get('processing'), unit = 'frame', dynamic_ncols = True, bar_format = progress_bar_format) as progress:
		multi_process_frame(source_path, frame_paths, process_frames, lambda: update_progress(progress))


def update_progress(progress : Any = None) -> None:
	process = psutil.Process(os.getpid())
	memory_usage = process.memory_info().rss / 1024 / 1024 / 1024
	progress.set_postfix(
	{
		'memory_usage': '{:.2f}'.format(memory_usage).zfill(5) + 'GB',
		'execution_providers': facefusion.globals.execution_providers,
		'execution_thread_count': facefusion.globals.execution_thread_count,
		'execution_queue_count': facefusion.globals.execution_queue_count
	})
	progress.refresh()
	progress.update(1)


def get_device() -> str:
	if 'CUDAExecutionProvider' in facefusion.globals.execution_providers:
		return 'cuda'
	if 'CoreMLExecutionProvider' in facefusion.globals.execution_providers:
		return 'mps'
	return 'cpu'
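Note on `multi_process_frame` above: the frame paths are split into batches of `len(paths) // thread_count * queue_count` items (at least one), and each batch is submitted as one future. The sketch below reproduces only that batching arithmetic so the resulting batch sizes can be inspected without a thread pool. The helper name `chunk_paths` and its parameters are assumptions for illustration, not part of this commit.

```python
from queue import Queue
from typing import List


def chunk_paths(paths : List[str], thread_count : int, queue_count : int) -> List[List[str]]:
	queue : 'Queue[str]' = Queue()
	for path in paths:
		queue.put(path)
	# same expression as queue_per_future in the diff: floor division first, then multiply
	per_future = max(len(paths) // thread_count * queue_count, 1)
	chunks = []
	while not queue.empty():
		chunk = []
		for _ in range(per_future):
			if not queue.empty():
				chunk.append(queue.get())
		chunks.append(chunk)
	return chunks


paths = [ 'frame_' + str(index) for index in range(10) ]
print([ len(chunk) for chunk in chunk_paths(paths, 4, 1) ]) # [2, 2, 2, 2, 2]
print([ len(chunk) for chunk in chunk_paths(paths, 4, 2) ]) # [4, 4, 2]
print([ len(chunk) for chunk in chunk_paths(paths[:3], 8, 1) ]) # [1, 1, 1]
```

Because the floor division happens before the multiplication, a larger `queue_count` produces fewer, larger batches. With fewer frames than threads, the `max(..., 1)` guard falls back to one frame per batch.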

0

facefusion/processors/frame/modules/__init__.py

Normal file

100

facefusion/processors/frame/modules/face_enhancer.py

Normal file

@ -0,0 +1,100 @@

from typing import Any, List, Callable
import cv2
import threading
from gfpgan.utils import GFPGANer

import facefusion.globals
import facefusion.processors.frame.core as frame_processors
from facefusion import wording
from facefusion.core import update_status
from facefusion.face_analyser import get_many_faces
from facefusion.typing import Frame, Face
from facefusion.utilities import conditional_download, resolve_relative_path, is_image, is_video

FRAME_PROCESSOR = None
THREAD_SEMAPHORE = threading.Semaphore()
THREAD_LOCK = threading.Lock()
NAME = 'FACEFUSION.FRAME_PROCESSOR.FACE_ENHANCER'


def get_frame_processor() -> Any:
	global FRAME_PROCESSOR

	with THREAD_LOCK:
		if FRAME_PROCESSOR is None:
			model_path = resolve_relative_path('../.assets/models/GFPGANv1.4.pth')
			FRAME_PROCESSOR = GFPGANer(
				model_path = model_path,
				upscale = 1,
				device = frame_processors.get_device()
			)
	return FRAME_PROCESSOR


def clear_frame_processor() -> None:
	global FRAME_PROCESSOR

	FRAME_PROCESSOR = None


def pre_check() -> bool:
	download_directory_path = resolve_relative_path('../.assets/models')
	conditional_download(download_directory_path, ['https://huggingface.co/facefusion/models/resolve/main/GFPGANv1.4.pth'])
	return True


def pre_process() -> bool:
	if not is_image(facefusion.globals.target_path) and not is_video(facefusion.globals.target_path):
		update_status(wording.get('select_image_or_video_target') + wording.get('exclamation_mark'), NAME)
		return False
	return True


def post_process() -> None:
	clear_frame_processor()


def enhance_face(target_face : Face, temp_frame : Frame) -> Frame:
	start_x, start_y, end_x, end_y = map(int, target_face['bbox'])
	padding_x = int((end_x - start_x) * 0.5)
	padding_y = int((end_y - start_y) * 0.5)
	start_x = max(0, start_x - padding_x)
	start_y = max(0, start_y - padding_y)
	end_x = max(0, end_x + padding_x)
	end_y = max(0, end_y + padding_y)
	crop_frame = temp_frame[start_y:end_y, start_x:end_x]
	if crop_frame.size:
		with THREAD_SEMAPHORE:
			_, _, crop_frame = get_frame_processor().enhance(
				crop_frame,
				paste_back = True
			)
		temp_frame[start_y:end_y, start_x:end_x] = crop_frame
	return temp_frame


def process_frame(source_face : Face, reference_face : Face, temp_frame : Frame) -> Frame:
	many_faces = get_many_faces(temp_frame)
	if many_faces:
		for target_face in many_faces:
			temp_frame = enhance_face(target_face, temp_frame)
	return temp_frame


def process_frames(source_path : str, temp_frame_paths : List[str], update: Callable[[], None]) -> None:
	for temp_frame_path in temp_frame_paths:
		temp_frame = cv2.imread(temp_frame_path)
		result_frame = process_frame(None, None, temp_frame)
		cv2.imwrite(temp_frame_path, result_frame)
		if update:
			update()


def process_image(source_path : str, target_path : str, output_path : str) -> None:
	target_frame = cv2.imread(target_path)
	result_frame = process_frame(None, None, target_frame)
	cv2.imwrite(output_path, result_frame)


def process_video(source_path : str, temp_frame_paths : List[str]) -> None:
	facefusion.processors.frame.core.process_video(None, temp_frame_paths, process_frames)
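Note on `enhance_face` above: the bounding box is grown by half its width and height on every side before cropping. The start coordinates are clamped at zero; the end coordinates are not clamped to the frame size, which works because NumPy slices that run past the end of an array are clipped. The padding arithmetic can be sketched on its own. The helper name `pad_bbox` and its `padding_ratio` parameter are assumptions for illustration, not part of this commit.

```python
from typing import Sequence, Tuple


def pad_bbox(bbox : Sequence[float], padding_ratio : float = 0.5) -> Tuple[int, int, int, int]:
	# same arithmetic as enhance_face in the diff, with the ratio made explicit
	start_x, start_y, end_x, end_y = map(int, bbox)
	padding_x = int((end_x - start_x) * padding_ratio)
	padding_y = int((end_y - start_y) * padding_ratio)
	start_x = max(0, start_x - padding_x)
	start_y = max(0, start_y - padding_y)
	end_x = max(0, end_x + padding_x)
	end_y = max(0, end_y + padding_y)
	return start_x, start_y, end_x, end_y


# a 40x40 box near the top edge: start_y is clamped to 0, end_x may exceed the frame width
print(pad_bbox([ 150, 20, 190, 60 ])) # (130, 0, 210, 80)
```

For a frame 200 pixels wide, the slice `temp_frame[0:80, 130:210]` would then cover columns 130 to 199 only, since the slice is clipped at the array boundary.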

105

facefusion/processors/frame/modules/face_swapper.py

Normal file

@ -0,0 +1,105 @@

from typing import Any, List, Callable
import cv2
import insightface
import threading

import facefusion.globals
import facefusion.processors.frame.core as frame_processors
from facefusion import wording
from facefusion.core import update_status
from facefusion.face_analyser import get_one_face, get_many_faces, find_similar_faces
from facefusion.face_reference import get_face_reference, set_face_reference
from facefusion.typing import Face, Frame
from facefusion.utilities import conditional_download, resolve_relative_path, is_image, is_video

FRAME_PROCESSOR = None
THREAD_LOCK = threading.Lock()
NAME = 'FACEFUSION.FRAME_PROCESSOR.FACE_SWAPPER'


def get_frame_processor() -> Any:
	global FRAME_PROCESSOR

	with THREAD_LOCK:
		if FRAME_PROCESSOR is None:
			model_path = resolve_relative_path('../.assets/models/inswapper_128.onnx')
			FRAME_PROCESSOR = insightface.model_zoo.get_model(model_path, providers = facefusion.globals.execution_providers)
	return FRAME_PROCESSOR


def clear_frame_processor() -> None:
	global FRAME_PROCESSOR

	FRAME_PROCESSOR = None


def pre_check() -> bool:
	download_directory_path = resolve_relative_path('../.assets/models')
	conditional_download(download_directory_path, ['https://huggingface.co/facefusion/models/resolve/main/inswapper_128.onnx'])
	return True


def pre_process() -> bool:
	if not is_image(facefusion.globals.source_path):
		update_status(wording.get('select_image_source') + wording.get('exclamation_mark'), NAME)
		return False
	elif not get_one_face(cv2.imread(facefusion.globals.source_path)):
		update_status(wording.get('no_source_face_detected') + wording.get('exclamation_mark'), NAME)
		return False
	if not is_image(facefusion.globals.target_path) and not is_video(facefusion.globals.target_path):
		update_status(wording.get('select_image_or_video_target') + wording.get('exclamation_mark'), NAME)
		return False
	return True


def post_process() -> None:
	clear_frame_processor()


def swap_face(source_face : Face, target_face : Face, temp_frame : Frame) -> Frame:
	return get_frame_processor().get(temp_frame, target_face, source_face, paste_back = True)


def process_frame(source_face : Face, reference_face : Face, temp_frame : Frame) -> Frame:
	if 'reference' in facefusion.globals.face_recognition:
		similar_faces = find_similar_faces(temp_frame, reference_face, facefusion.globals.reference_face_distance)
		if similar_faces:
			for similar_face in similar_faces:
				temp_frame = swap_face(source_face, similar_face, temp_frame)
	if 'many' in facefusion.globals.face_recognition:
		many_faces = get_many_faces(temp_frame)
		if many_faces:
			for target_face in many_faces:
				temp_frame = swap_face(source_face, target_face, temp_frame)
	return temp_frame


def process_frames(source_path : str, temp_frame_paths : List[str], update: Callable[[], None]) -> None:
	source_face = get_one_face(cv2.imread(source_path))
	reference_face = get_face_reference() if 'reference' in facefusion.globals.face_recognition else None
	for temp_frame_path in temp_frame_paths:
		temp_frame = cv2.imread(temp_frame_path)
		result_frame = process_frame(source_face, reference_face, temp_frame)
		cv2.imwrite(temp_frame_path, result_frame)
		if update:
			update()


def process_image(source_path : str, target_path : str, output_path : str) -> None:
	source_face = get_one_face(cv2.imread(source_path))
	target_frame = cv2.imread(target_path)
	reference_face = get_one_face(target_frame, facefusion.globals.reference_face_position) if 'reference' in facefusion.globals.face_recognition else None
	result_frame = process_frame(source_face, reference_face, target_frame)
	cv2.imwrite(output_path, result_frame)


def process_video(source_path : str, temp_frame_paths : List[str]) -> None:
	conditional_set_face_reference(temp_frame_paths)
	frame_processors.process_video(source_path, temp_frame_paths, process_frames)


def conditional_set_face_reference(temp_frame_paths : List[str]) -> None:
	if 'reference' in facefusion.globals.face_recognition and not get_face_reference():
		reference_frame = cv2.imread(temp_frame_paths[facefusion.globals.reference_frame_number])
		reference_face = get_one_face(reference_frame, facefusion.globals.reference_face_position)
		set_face_reference(reference_face)

88

facefusion/processors/frame/modules/frame_enhancer.py

Normal file

@ -0,0 +1,88 @@

from typing import Any, List, Callable
import cv2
import threading
from basicsr.archs.rrdbnet_arch import RRDBNet
from realesrgan import RealESRGANer

import facefusion.processors.frame.core as frame_processors
from facefusion.typing import Frame, Face
from facefusion.utilities import conditional_download, resolve_relative_path

FRAME_PROCESSOR = None
THREAD_SEMAPHORE = threading.Semaphore()
THREAD_LOCK = threading.Lock()
NAME = 'FACEFUSION.FRAME_PROCESSOR.FRAME_ENHANCER'


def get_frame_processor() -> Any:
	global FRAME_PROCESSOR

	with THREAD_LOCK:
		if FRAME_PROCESSOR is None:
			model_path = resolve_relative_path('../.assets/models/RealESRGAN_x4plus.pth')
			FRAME_PROCESSOR = RealESRGANer(
				model_path = model_path,
				model = RRDBNet(
					num_in_ch = 3,
					num_out_ch = 3,
					num_feat = 64,
					num_block = 23,
					num_grow_ch = 32,
					scale = 4
				),
				device = frame_processors.get_device(),
				tile = 512,
				tile_pad = 32,
				pre_pad = 0,
				scale = 4
			)
	return FRAME_PROCESSOR


def clear_frame_processor() -> None:
	global FRAME_PROCESSOR

	FRAME_PROCESSOR = None


def pre_check() -> bool:
	download_directory_path = resolve_relative_path('../.assets/models')
	conditional_download(download_directory_path, ['https://huggingface.co/facefusion/models/resolve/main/RealESRGAN_x4plus.pth'])
	return True


def pre_process() -> bool:
	return True


def post_process() -> None:
	clear_frame_processor()


def enhance_frame(temp_frame : Frame) -> Frame:
	with THREAD_SEMAPHORE:
		temp_frame, _ = get_frame_processor().enhance(temp_frame, outscale = 1)
	return temp_frame


def process_frame(source_face : Face, reference_face : Face, temp_frame : Frame) -> Frame:
	return enhance_frame(temp_frame)


def process_frames(source_path : str, temp_frame_paths : List[str], update: Callable[[], None]) -> None:
	for temp_frame_path in temp_frame_paths:
		temp_frame = cv2.imread(temp_frame_path)
		result_frame = process_frame(None, None, temp_frame)
		cv2.imwrite(temp_frame_path, result_frame)
		if update:
			update()


def process_image(source_path : str, target_path : str, output_path : str) -> None:
	target_frame = cv2.imread(target_path)