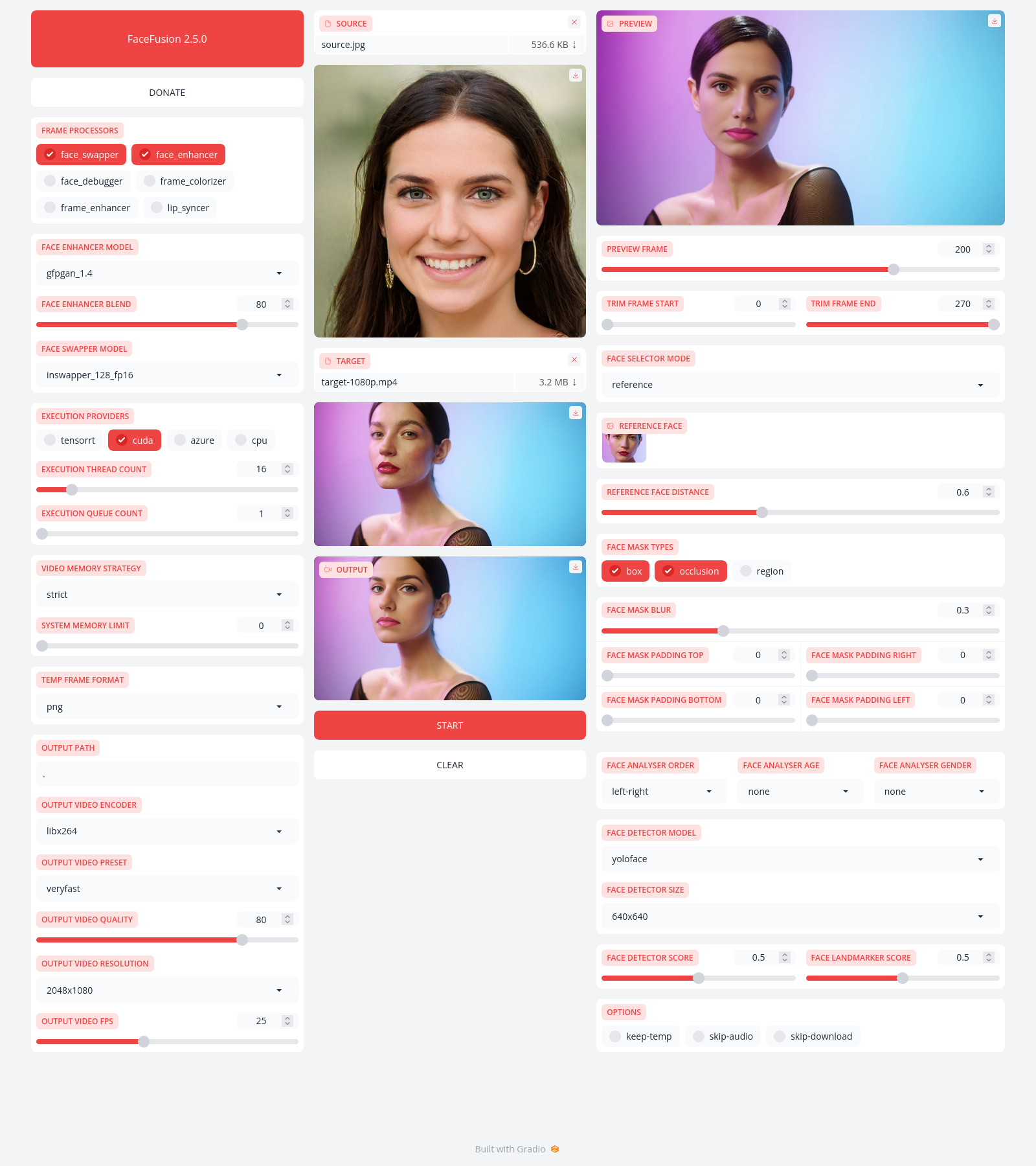

FaceFusion

Next generation face swapper and enhancer.

Preview

Installation

Be aware that the installation requires technical skills and is not for beginners. Please do not open platform-related or installation-related issues on GitHub. We have a very helpful Discord community that will guide you through installing FaceFusion.

Read the installation guide now.

Usage

Run the program as needed.

python run.py [options]

-h, --help show this help message and exit

-s SOURCE_PATH, --source SOURCE_PATH select a source image

-t TARGET_PATH, --target TARGET_PATH select a target image or video

-o OUTPUT_PATH, --output OUTPUT_PATH specify the output file or directory

--frame-processors FRAME_PROCESSORS [FRAME_PROCESSORS ...] choose from the available frame processors (choices: face_enhancer, face_swapper, frame_enhancer, ...)

--ui-layouts UI_LAYOUTS [UI_LAYOUTS ...] choose from the available ui layouts (choices: benchmark, webcam, default, ...)

--keep-fps preserve the frames per second (fps) of the target

--keep-temp retain temporary frames after processing

--skip-audio omit audio from the target

--face-recognition {reference,many} specify the method for face recognition

--face-analyser-direction {left-right,right-left,top-bottom,bottom-top,small-large,large-small} specify the direction used for face analysis

--face-analyser-age {child,teen,adult,senior} specify the age used for face analysis

--face-analyser-gender {male,female} specify the gender used for face analysis

--reference-face-position REFERENCE_FACE_POSITION specify the position of the reference face

--reference-face-distance REFERENCE_FACE_DISTANCE specify the distance between the reference face and the target face

--reference-frame-number REFERENCE_FRAME_NUMBER specify the number of the reference frame

--trim-frame-start TRIM_FRAME_START specify the start frame for extraction

--trim-frame-end TRIM_FRAME_END specify the end frame for extraction

--temp-frame-format {jpg,png} specify the image format used for frame extraction

--temp-frame-quality [0-100] specify the image quality used for frame extraction

--output-image-quality [0-100] specify the quality used for the output image

--output-video-encoder {libx264,libx265,libvpx-vp9,h264_nvenc,hevc_nvenc} specify the encoder used for the output video

--output-video-quality [0-100] specify the quality used for the output video

--max-memory MAX_MEMORY specify the maximum amount of ram to be used (in gb)

--execution-providers {cpu} [{cpu} ...] choose from the available execution providers (choices: cpu, ...)

--execution-thread-count EXECUTION_THREAD_COUNT specify the number of execution threads

--execution-queue-count EXECUTION_QUEUE_COUNT specify the number of execution queues

--headless run the program in headless mode

-v, --version show program's version number and exit
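
The options above can also be assembled programmatically when the program is driven from a script. The following is a minimal sketch, not part of FaceFusion itself: the helper name `build_command` and the file names are hypothetical, and it assumes that a headless run is given a source, a target and an output path. It only uses flags listed in the help text above.

```python
import shlex
import sys
from typing import List, Optional


def build_command(source: str, target: str, output: str,
                  frame_processors: Optional[List[str]] = None,
                  output_video_quality: Optional[int] = None,
                  keep_fps: bool = False) -> List[str]:
    """Assemble an argument list for a headless run of run.py (hypothetical helper)."""
    command = [sys.executable, 'run.py', '--headless',
               '--source', source, '--target', target, '--output', output]
    if frame_processors:
        # One flag followed by several values, as in the usage text
        command += ['--frame-processors', *frame_processors]
    if output_video_quality is not None:
        # The help text documents the range [0-100]
        if not 0 <= output_video_quality <= 100:
            raise ValueError('output_video_quality must be within 0-100')
        command += ['--output-video-quality', str(output_video_quality)]
    if keep_fps:
        command.append('--keep-fps')
    return command


if __name__ == '__main__':
    # Placeholder file names, chosen only for illustration
    command = build_command('source.jpg', 'target.mp4', 'output.mp4',
                            frame_processors=['face_swapper', 'face_enhancer'],
                            output_video_quality=80, keep_fps=True)
    # Print the command instead of executing it
    print(shlex.join(command))
```

The returned list can be passed to `subprocess.run(command, check=True)` from the repository root. Keeping the arguments as a list rather than a single string avoids quoting problems with paths that contain spaces.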

Disclaimer

This software is meant to be a productive contribution to the rapidly growing AI-generated media industry. It will help artists with tasks such as animating a custom character or using the character as a model for clothing etc.

The developers of this software are aware of its possible unethical applications and are committed to taking preventive measures against them. It has a built-in check that prevents the program from processing inappropriate media, including but not limited to nudity, graphic content, and sensitive material such as war footage. We will continue to develop this project in a positive direction while adhering to law and ethics. This project may be shut down or may add watermarks to the output if required by law.

Users of this software are expected to use it responsibly and to abide by local law. If the face of a real person is used, users are advised to obtain consent from that person and to state clearly that the content is a deepfake when posting it online. The developers of this software will not be responsible for the actions of end users.

Documentation

Read the documentation for a deep dive.