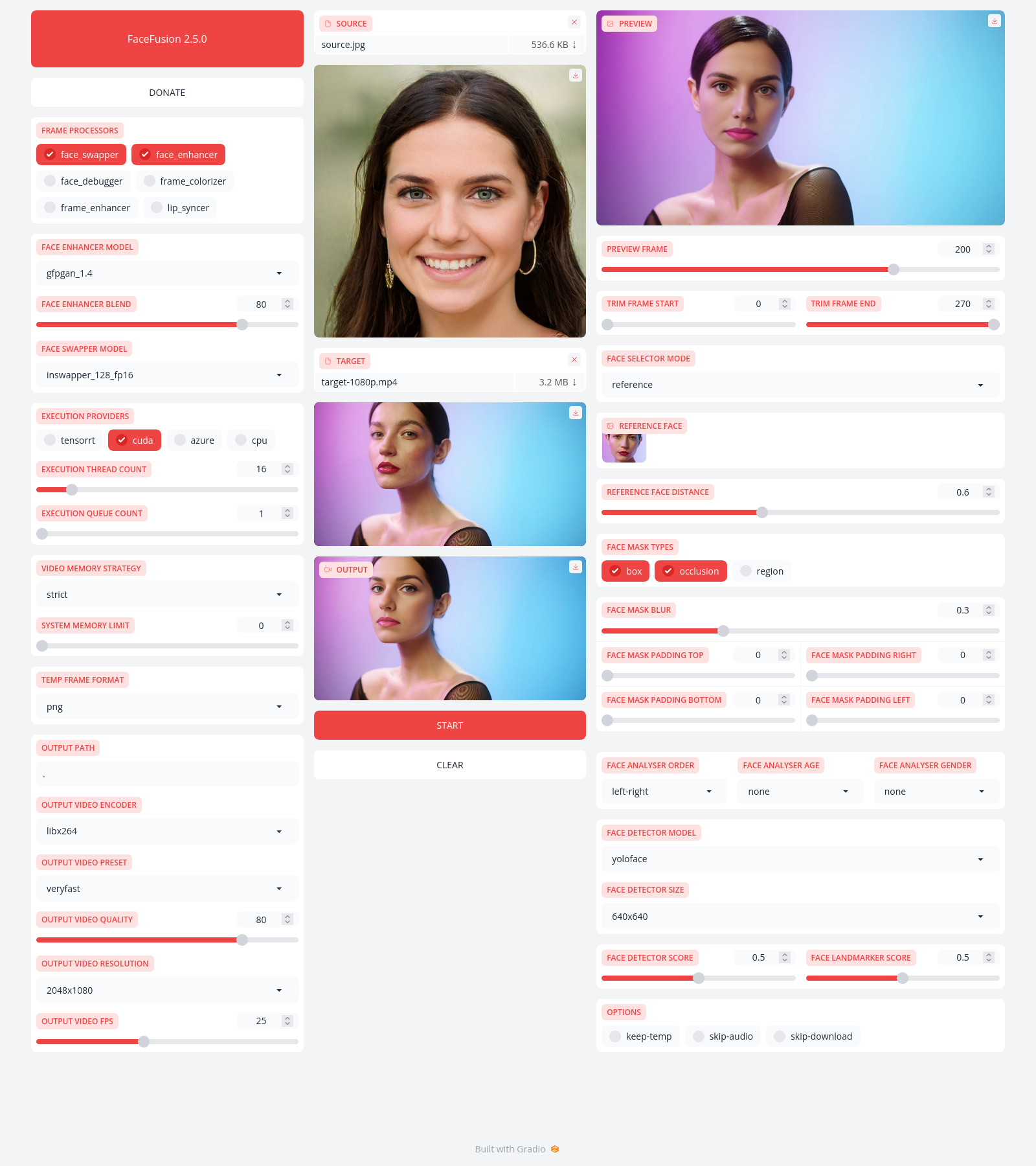

FaceFusion
==========

> Next generation face swapper and enhancer.

[](https://github.com/facefusion/facefusion/actions?query=workflow:ci)

Preview
-------

Installation
------------

Be aware that the installation requires technical skills and is not for beginners. Please do not open platform-related or installation-related issues on GitHub. Our [Discord](https://join.facefusion.io) community is very helpful and will guide you through the installation.

Get started with the [installation](https://docs.facefusion.io/installation) guide.

Usage
-----

Run the command:

```
python run.py [options]

options:
  -h, --help  show this help message and exit
  -s SOURCE_PATHS, --source SOURCE_PATHS  select a source image
  -t TARGET_PATH, --target TARGET_PATH  select a target image or video
  -o OUTPUT_PATH, --output OUTPUT_PATH  specify the output file or directory
  -v, --version  show program's version number and exit

misc:
  --skip-download  omit automate downloads and lookups
  --headless  run the program in headless mode
  --log-level {error,warn,info,debug}  choose from the available log levels

execution:
  --execution-providers EXECUTION_PROVIDERS [EXECUTION_PROVIDERS ...]  choose from the available execution providers (choices: cpu, ...)
  --execution-thread-count [1-128]  specify the number of execution threads
  --execution-queue-count [1-32]  specify the number of execution queries

memory:
  --video-memory-strategy {strict,moderate,tolerant}  specify strategy to handle the video memory
  --system-memory-limit [0-128]  specify the amount (gb) of system memory to be used

face analyser:
  --face-analyser-order {left-right,right-left,top-bottom,bottom-top,small-large,large-small,best-worst,worst-best}  specify the order used for the face analyser
  --face-analyser-age {child,teen,adult,senior}  specify the age used for the face analyser
  --face-analyser-gender {male,female}  specify the gender used for the face analyser
  --face-detector-model {retinaface,yunet}  specify the model used for the face detector
  --face-detector-size {160x160,320x320,480x480,512x512,640x640,768x768,960x960,1024x1024}  specify the size threshold used for the face detector
  --face-detector-score [0.0-1.0]  specify the score threshold used for the face detector

face selector:
  --face-selector-mode {reference,one,many}  specify the mode for the face selector
  --reference-face-position REFERENCE_FACE_POSITION  specify the position of the reference face
  --reference-face-distance [0.0-1.5]  specify the distance between the reference face and the target face
  --reference-frame-number REFERENCE_FRAME_NUMBER  specify the number of the reference frame

face mask:
  --face-mask-types FACE_MASK_TYPES [FACE_MASK_TYPES ...]  choose from the available face mask types (choices: box, occlusion, region)
  --face-mask-blur [0.0-1.0]  specify the blur amount for face mask
  --face-mask-padding FACE_MASK_PADDING [FACE_MASK_PADDING ...]  specify the face mask padding (top, right, bottom, left) in percent
  --face-mask-regions FACE_MASK_REGIONS [FACE_MASK_REGIONS ...]  choose from the available face mask regions (choices: skin, left-eyebrow, right-eyebrow, left-eye, right-eye, eye-glasses, nose, mouth, upper-lip, lower-lip)

frame extraction:
  --trim-frame-start TRIM_FRAME_START  specify the start frame for extraction
  --trim-frame-end TRIM_FRAME_END  specify the end frame for extraction
  --temp-frame-format {jpg,png,bmp}  specify the image format used for frame extraction
  --temp-frame-quality [0-100]  specify the image quality used for frame extraction
  --keep-temp  retain temporary frames after processing

output creation:
  --output-image-quality [0-100]  specify the quality used for the output image
  --output-video-encoder {libx264,libx265,libvpx-vp9,h264_nvenc,hevc_nvenc}  specify the encoder used for the output video
  --output-video-preset {ultrafast,superfast,veryfast,faster,fast,medium,slow,slower,veryslow}  specify the preset used for the output video
  --output-video-quality [0-100]  specify the quality used for the output video
  --output-video-resolution OUTPUT_VIDEO_RESOLUTION  specify the resolution used for the output video
  --output-video-fps OUTPUT_VIDEO_FPS  specify the frames per second (fps) used for the output video
  --skip-audio  omit audio from the target

frame processors:
  --frame-processors FRAME_PROCESSORS [FRAME_PROCESSORS ...]  choose from the available frame processors (choices: face_debugger, face_enhancer, face_swapper, frame_enhancer, ...)
  --face-debugger-items FACE_DEBUGGER_ITEMS [FACE_DEBUGGER_ITEMS ...]  specify the face debugger items (choices: bbox, kps, face-mask, score)
  --face-enhancer-model {codeformer,gfpgan_1.2,gfpgan_1.3,gfpgan_1.4,gpen_bfr_256,gpen_bfr_512,restoreformer}  choose the model for the frame processor
  --face-enhancer-blend [0-100]  specify the blend amount for the frame processor
  --face-swapper-model {blendswap_256,inswapper_128,inswapper_128_fp16,simswap_256,simswap_512_unofficial}  choose the model for the frame processor
  --frame-enhancer-model {real_esrgan_x2plus,real_esrgan_x4plus,real_esrnet_x4plus}  choose the model for the frame processor
  --frame-enhancer-blend [0-100]  specify the blend amount for the frame processor

uis:
  --ui-layouts UI_LAYOUTS [UI_LAYOUTS ...]  choose from the available ui layouts (choices: benchmark, webcam, default, ...)
```
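
To make the listing above more concrete, here is a minimal sketch of how a headless run might be assembled from a Python script. It uses only flags shown in the help output. The `build_command` helper and the file names `source.jpg`, `target.mp4` and `output.mp4` are hypothetical placeholders, and the sketch assumes that `python` on your `PATH` is the interpreter FaceFusion was installed into. Check `python run.py --help` on your own installation before relying on any particular combination.

```python
import shlex


def build_command(source, target, output, extra_args=()):
    """Assemble an argument list for a headless run.

    Hypothetical helper for illustration only; it is not part of FaceFusion.
    """
    command = [
        "python", "run.py",
        "--headless",          # skip the UI and process directly
        "--source", source,    # source image
        "--target", target,    # target image or video
        "--output", output,    # output file or directory
    ]
    command.extend(extra_args)
    return command


command = build_command(
    "source.jpg", "target.mp4", "output.mp4",
    extra_args=[
        "--frame-processors", "face_swapper", "face_enhancer",
        "--output-video-encoder", "libx264",
        "--output-video-preset", "veryfast",
    ],
)

# Print the command as a single shell-safe line for inspection.
print(shlex.join(command))

# To actually launch it from the repository root, one could use:
# import subprocess
# subprocess.run(command, check=True)
```

Building the command as a list rather than a single string avoids shell quoting problems when paths contain spaces.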

Documentation
-------------

Read the [documentation](https://docs.facefusion.io) for a deep dive.
