
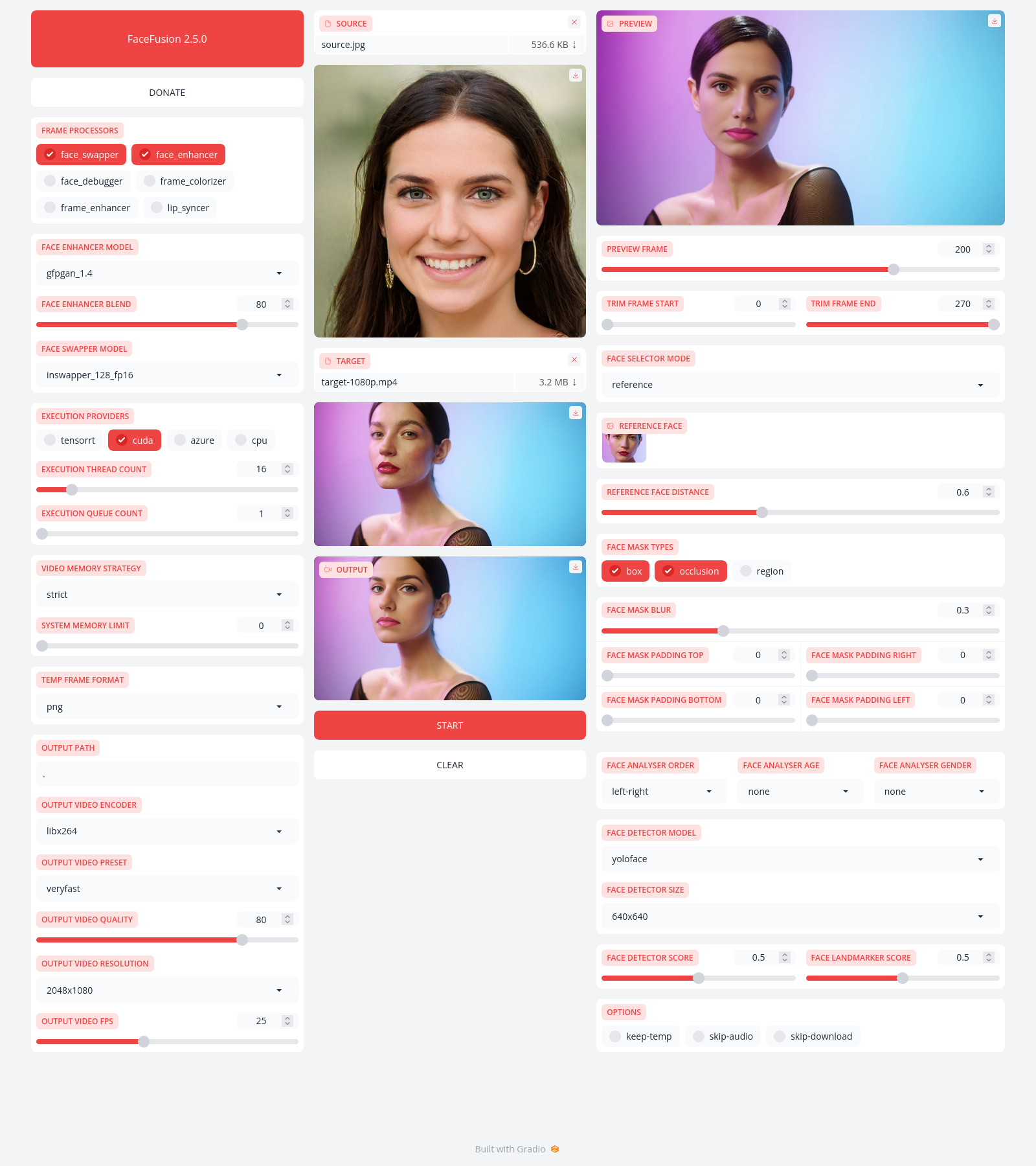

FaceFusion
==========

> Next generation face swapper and enhancer.


[](https://github.com/facefusion/facefusion/actions?query=workflow:ci)


Preview
-------

Installation
------------

Be aware that the installation requires technical skills and is not intended for beginners. Please do not open platform-related or installation-related issues on GitHub. Our [Discord](https://join.facefusion.io) community is very helpful and will guide you through the installation.


Get started with the [installation](https://docs.facefusion.io/installation) guide.

Usage
-----

Run the command:


```
python run.py [options]

options:
  -h, --help
      show this help message and exit
  -s SOURCE_PATHS, --source SOURCE_PATHS
      select a source image
  -t TARGET_PATH, --target TARGET_PATH
      select a target image or video
  -o OUTPUT_PATH, --output OUTPUT_PATH
      specify the output file or directory
  -v, --version
      show program's version number and exit

misc:
  --skip-download
      omit automate downloads and lookups
  --headless
      run the program in headless mode
  --log-level {error,warn,info,debug}
      choose from the available log levels

execution:
  --execution-providers EXECUTION_PROVIDERS [EXECUTION_PROVIDERS ...]
      choose from the available execution providers (choices: cpu, ...)
  --execution-thread-count [1-128]
      specify the number of execution threads
  --execution-queue-count [1-32]
      specify the number of execution queries
  --max-memory [0-128]
      specify the maximum amount of ram to be used (in gb)

face analyser:
  --face-analyser-order {left-right,right-left,top-bottom,bottom-top,small-large,large-small,best-worst,worst-best}
      specify the order used for the face analyser
  --face-analyser-age {child,teen,adult,senior}
      specify the age used for the face analyser
  --face-analyser-gender {male,female}
      specify the gender used for the face analyser
  --face-detector-model {retinaface,yunet}
      specify the model used for the face detector
  --face-detector-size {160x160,320x320,480x480,512x512,640x640,768x768,960x960,1024x1024}
      specify the size threshold used for the face detector
  --face-detector-score [0.0-1.0]
      specify the score threshold used for the face detector

face selector:
  --face-selector-mode {reference,one,many}
      specify the mode for the face selector
  --reference-face-position REFERENCE_FACE_POSITION
      specify the position of the reference face
  --reference-face-distance [0.0-1.5]
      specify the distance between the reference face and the target face
  --reference-frame-number REFERENCE_FRAME_NUMBER
      specify the number of the reference frame

face mask:
  --face-mask-types FACE_MASK_TYPES [FACE_MASK_TYPES ...]
      choose from the available face mask types (choices: box, occlusion, region)
  --face-mask-blur [0.0-1.0]
      specify the blur amount for face mask
  --face-mask-padding FACE_MASK_PADDING [FACE_MASK_PADDING ...]
      specify the face mask padding (top, right, bottom, left) in percent
  --face-mask-regions FACE_MASK_REGIONS [FACE_MASK_REGIONS ...]
      choose from the available face mask regions (choices: skin, left-eyebrow, right-eyebrow, left-eye, right-eye, eye-glasses, nose, mouth, upper-lip, lower-lip)

frame extraction:
  --trim-frame-start TRIM_FRAME_START
      specify the start frame for extraction
  --trim-frame-end TRIM_FRAME_END
      specify the end frame for extraction
  --temp-frame-format {jpg,png}
      specify the image format used for frame extraction
  --temp-frame-quality [0-100]
      specify the image quality used for frame extraction
  --keep-temp
      retain temporary frames after processing

output creation:
  --output-image-quality [0-100]
      specify the quality used for the output image
  --output-video-encoder {libx264,libx265,libvpx-vp9,h264_nvenc,hevc_nvenc}
      specify the encoder used for the output video
  --output-video-quality [0-100]
      specify the quality used for the output video
  --keep-fps
      preserve the frames per second (fps) of the target
  --skip-audio
      omit audio from the target

frame processors:
  --frame-processors FRAME_PROCESSORS [FRAME_PROCESSORS ...]
      choose from the available frame processors (choices: face_debugger, face_enhancer, face_swapper, frame_enhancer, ...)
  --face-debugger-items FACE_DEBUGGER_ITEMS [FACE_DEBUGGER_ITEMS ...]
      specify the face debugger items (choices: bbox, kps, face-mask, score)
  --face-enhancer-model {codeformer,gfpgan_1.2,gfpgan_1.3,gfpgan_1.4,gpen_bfr_256,gpen_bfr_512,restoreformer}
      choose the model for the frame processor
  --face-enhancer-blend [0-100]
      specify the blend amount for the frame processor
  --face-swapper-model {blendswap_256,inswapper_128,inswapper_128_fp16,simswap_256,simswap_512_unofficial}
      choose the model for the frame processor
  --frame-enhancer-model {real_esrgan_x2plus,real_esrgan_x4plus,real_esrnet_x4plus}
      choose the model for the frame processor
  --frame-enhancer-blend [0-100]
      specify the blend amount for the frame processor

uis:
  --ui-layouts UI_LAYOUTS [UI_LAYOUTS ...]
      choose from the available ui layouts (choices: benchmark, webcam, default, ...)
```
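
As an illustration of how these options combine, the following is a minimal sketch that assembles a headless invocation from Python. It uses only flags listed in the help text above. The helper `build_command` and the file names `source.jpg`, `target.mp4` and `output.mp4` are hypothetical and not part of FaceFusion, and the sketch assumes it is run from the project directory that contains `run.py`.

```python
import shlex
import sys
from typing import List


def build_command(source_path: str, target_path: str, output_path: str, frame_processors: List[str]) -> List[str]:
    # Hypothetical helper: builds an argument list for run.py
    # using only flags that appear in the help text.
    command = [sys.executable, 'run.py', '--headless']
    command += ['-s', source_path, '-t', target_path, '-o', output_path]
    # --frame-processors accepts one or more values, so it is placed last.
    command += ['--frame-processors', *frame_processors]
    return command


if __name__ == '__main__':
    # Placeholder file names, replace them with real paths.
    command = build_command('source.jpg', 'target.mp4', 'output.mp4', ['face_swapper', 'face_enhancer'])
    # Print the command for inspection instead of running it.
    print(shlex.join(command))
    # To start processing, pass the list to subprocess.run(command, check=True).
```

Printing the command before running it makes it easy to check the arguments against the help text first.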

Documentation
-------------

Read the [documentation](https://docs.facefusion.io) for a deep dive.
